Introduction to Deep Learning

Definition of deep learning

Deep learning is a subfield of machine learning, itself a branch of artificial intelligence (AI), built on artificial neural networks: algorithms loosely inspired by the structure and function of the human brain. These networks learn to make predictions and decisions by analyzing large amounts of data. Rather than relying on hand-written rules or manually engineered features, deep learning models discover useful patterns and features in the data automatically.

What sets deep learning apart from traditional machine learning approaches is its ability to work with unstructured data such as images, videos, and text. This makes it particularly well-suited for tasks like image and speech recognition, natural language processing, and autonomous driving.

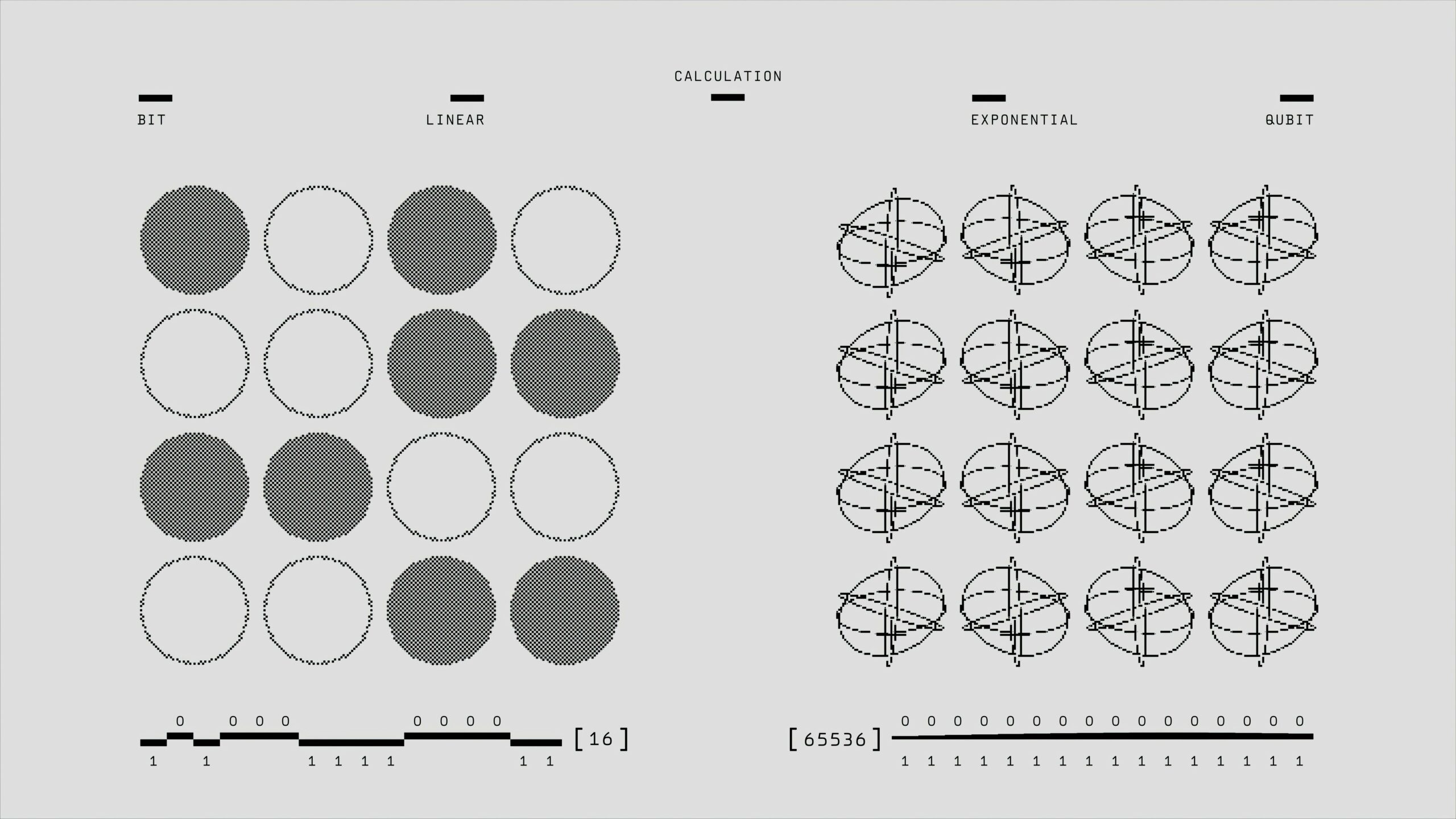

At the core of deep learning is the concept of neural networks, which are composed of layers of interconnected nodes (neurons) that process information. By adjusting the weights and biases of these connections, neural networks can iteratively learn from data and improve their performance on a given task.

Deep learning has gained significant traction in recent years due to advancements in computational power, the availability of large datasets, and improvements in algorithmic techniques. This has led to breakthroughs in various fields, from healthcare and finance to entertainment and transportation, unlocking new possibilities and transforming industries.

Brief history and development of deep learning

Deep learning, a subset of machine learning, has gained significant attention and popularity in recent years due to its ability to learn complex patterns and representations from data. Its roots can be traced back to the 1940s, when Warren McCulloch and Walter Pitts proposed the first mathematical model of an artificial neuron (1943); Frank Rosenblatt’s perceptron followed in the late 1950s. However, it wasn’t until the 1980s and 1990s that multi-layer neural networks started to gain traction, primarily through the work of researchers such as Geoffrey Hinton, Yann LeCun, and Yoshua Bengio.

One of the key milestones in the development of deep learning was backpropagation. Seppo Linnainmaa described the underlying method, reverse-mode automatic differentiation, in 1970, and Rumelhart, Hinton, and Williams popularized its application to neural networks in 1986. This technique allowed neural networks to efficiently learn and update their parameters during the training process, paving the way for the training of deeper and more complex models.

From the mid-2000s onward, the availability of large datasets, increased computational power (notably GPUs), and advancements in algorithms further propelled the progress of deep learning. Subsequent breakthroughs in image recognition, speech recognition, and natural language processing demonstrated the capabilities of deep learning models in solving real-world problems.

The deep learning revolution truly took off in 2012 when a deep convolutional neural network developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, known as AlexNet, outperformed traditional computer vision models by a significant margin in the ImageNet competition. Since then, deep learning has become the driving force behind many cutting-edge technologies and innovations across various domains.

The continuous research and development in deep learning have led to the creation of more sophisticated architectures, improved optimization techniques, and the democratization of deep learning frameworks and tools. Today, deep learning is not only a powerful tool for building intelligent systems but also a driving force behind the advancements in artificial intelligence.

Neural Networks in Deep Learning

Explanation of neural networks

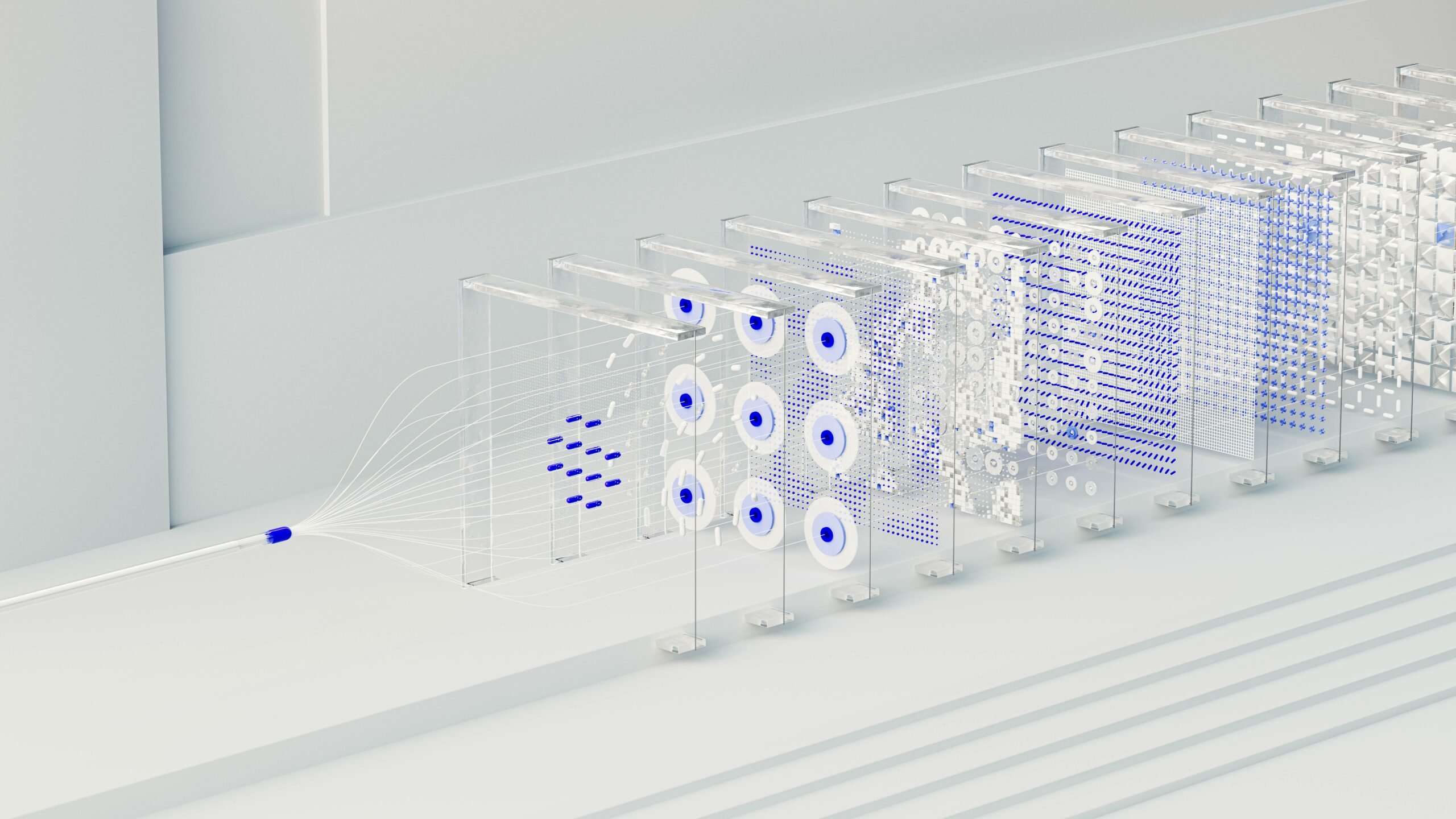

Neural networks are a fundamental component of deep learning, serving as the building blocks for processing and learning from data. Inspired by the structure of the human brain, neural networks are composed of layers of interconnected nodes, known as neurons. These neurons receive input, process it through activation functions, and produce an output.

In a neural network, information flows through interconnected layers, with each layer performing specific operations on the data. The input layer receives raw data, such as images or text, and passes it to one or more hidden layers where the data is processed. The final output layer produces the network’s prediction or decision based on the processed information.
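The flow from input layer to hidden layer to output layer can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the layer sizes (3 inputs, 2 hidden neurons, 1 output), the hand-picked weights, and the helper names `dense` and `forward` are hypothetical and not taken from any particular library.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron computes activation(w . x + b)."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

# Hypothetical hand-picked parameters: 3 inputs -> 2 hidden neurons -> 1 output.
HIDDEN_W = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
HIDDEN_B = [0.0, 0.1]
OUTPUT_W = [[1.0, -1.0]]
OUTPUT_B = [0.2]

def forward(x):
    hidden = dense(x, HIDDEN_W, HIDDEN_B, relu)            # hidden layer
    return dense(hidden, OUTPUT_W, OUTPUT_B, sigmoid)[0]   # output layer

prediction = forward([1.0, 2.0, 3.0])
print(f"prediction: {prediction:.4f}")
```

In a trained network the weights would come from the training process rather than being written by hand; only the structure of the computation is the point here.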

There are various types of neural networks used in deep learning, each designed for specific tasks and data types. Convolutional Neural Networks (CNNs) are commonly used for image recognition and computer vision tasks due to their ability to effectively learn spatial hierarchies. Recurrent Neural Networks (RNNs), on the other hand, are well-suited for sequential data, making them ideal for natural language processing and speech recognition applications.

Overall, neural networks play a crucial role in deep learning by enabling the model to learn complex patterns and relationships within the data. Their versatility and capability to handle different types of data have contributed to the widespread adoption of deep learning across various industries and applications.

Types of neural networks used in deep learning (e.g., convolutional neural networks, recurrent neural networks)

In deep learning, various types of neural networks are utilized to solve different types of problems. Two commonly used neural network architectures are convolutional neural networks (CNNs) and recurrent neural networks (RNNs).

Convolutional neural networks (CNNs) are particularly well-suited for tasks involving images and visual data. They are designed to automatically and adaptively learn spatial hierarchies of features from the input data. CNNs consist of multiple layers of neurons that apply convolutional filters to input data, allowing the network to effectively capture patterns and features at different levels of abstraction. CNNs have been highly successful in tasks such as image recognition, object detection, and image segmentation.
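To make the idea of a convolutional filter concrete, here is a minimal sketch in plain Python. It assumes no padding and a stride of 1, and the function name `conv2d`, the 4x4 toy image, and the hand-written edge filter are all illustrative choices; in a real CNN the filter values are learned during training.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the
    element-wise products at each position, as a CNN layer does."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# Toy 4x4 "image" with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written filter that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)
for row in feature_map:
    print(row)
```

The resulting feature map is non-zero only in the column where the edge sits, which is the sense in which a filter "detects" a local pattern. Stacking such layers lets later filters respond to combinations of earlier ones.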

On the other hand, recurrent neural networks (RNNs) are specialized for sequential data and are designed to capture dependencies and patterns over time. Unlike feedforward neural networks, RNNs have connections that form directed cycles, allowing information to persist and be passed from one step of the sequence to the next. This makes RNNs well-suited for tasks such as speech recognition, language modeling, and time series prediction.
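The recurrence that lets information persist across steps can be sketched with a single scalar hidden state. This is a simplified illustration, not a production RNN: the names `rnn_step` and `run_rnn` and the weight values are assumptions chosen for readability, and real networks use vectors and weight matrices.

```python
import math

def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One recurrent step: the new state depends on the current input
    and on the previous hidden state."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.9, b=0.0):
    h = 0.0  # initial hidden state
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, w_x, w_h, b)
        states.append(h)
    return states

# Only the first input is non-zero, yet later states are still non-zero:
# the hidden state carries information forward through the sequence.
states = run_rnn([1.0, 0.0, 0.0])
print([round(h, 4) for h in states])
```

The same weights are reused at every step, which is what distinguishes this loop from a feedforward stack of layers.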

Moreover, there are variations and improvements upon these basic architectures, such as long short-term memory (LSTM) networks and gated recurrent units (GRUs) for RNNs, which use gating mechanisms to mitigate the vanishing gradient problem and capture long-range dependencies more effectively.

Overall, the choice of neural network architecture in deep learning depends on the nature of the input data and the specific task at hand. Researchers and practitioners continue to explore and develop new types of neural networks to enhance the capabilities and performance of deep learning systems in various applications.

How Deep Learning Works

Training process in deep learning

Training in deep learning refers to the process of teaching a neural network to learn and make predictions based on data. This process involves feeding the network with input data, allowing it to make predictions, comparing these predictions to the actual output, and adjusting the internal parameters of the network to minimize the error.

The training process typically consists of the following key steps:

- Initialization: Initially, the weights and biases of the neural network are set randomly. These parameters are crucial as they determine how the network processes and transforms the input data to generate the output.

- Forward Propagation: During this step, the input data is fed into the network, and the network processes it through multiple layers of interconnected neurons. Each neuron applies a set of weights to the input data, adds a bias term, and passes the result through an activation function to introduce non-linearity.

- Loss Computation: After the input data propagates through the network, the output is compared to the actual target output. The loss function quantifies the error between the predicted output and the actual output.

- Backpropagation: Backpropagation is the process of calculating the gradient of the loss function with respect to the weights and biases of the neural network. This gradient is then used to update the network’s parameters in the opposite direction to minimize the loss.

- Optimization: Various optimization algorithms, such as stochastic gradient descent (SGD) or Adam, are used to update the weights and biases of the network based on the gradients calculated during backpropagation. The aim is to find the optimal set of parameters that minimize the loss function.

- Iteration: The process of forward propagation, loss computation, backpropagation, and optimization is repeated iteratively for a predefined number of epochs or until a stopping criterion is met. Each iteration helps the neural network to learn and improve its predictions.
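The steps above can be sketched on the smallest possible model, a single linear neuron fitted to a handful of points. This is a hedged illustration rather than a real training setup: it uses full-batch gradient descent instead of SGD or Adam, a mean squared error loss, and gradients derived by hand (for a one-neuron model, backpropagation reduces to these two formulas). The function name `train_linear_model` and all hyperparameter values are assumptions.

```python
import random

def train_linear_model(xs, ys, learning_rate=0.05, epochs=1000, seed=0):
    rng = random.Random(seed)
    # Initialization: start from small random parameters.
    w = rng.uniform(-0.1, 0.1)
    b = rng.uniform(-0.1, 0.1)
    n = len(xs)
    loss = float("inf")
    for _ in range(epochs):  # Iteration: repeat for a fixed number of epochs.
        # Forward propagation: compute predictions for every input.
        preds = [w * x + b for x in xs]
        # Loss computation: mean squared error against the targets.
        errors = [p - y for p, y in zip(preds, ys)]
        loss = sum(e * e for e in errors) / n
        # Backpropagation: gradient of the loss w.r.t. each parameter.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        # Optimization: step against the gradient to reduce the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, loss

# Toy data generated from y = 2x + 1, so the ideal parameters are known.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b, final_loss = train_linear_model(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, loss={final_loss:.2e}")
```

Deep learning frameworks automate the gradient computation and offer optimizers such as SGD and Adam, but the loop they run has the same shape.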

Through this training process, a deep learning model learns to extract relevant features from the input data and make accurate predictions. The success of deep learning models heavily relies on the quality and quantity of the training data, the network architecture, the choice of activation functions, and the optimization algorithm used during training.

Activation functions and backpropagation

Activation functions and backpropagation are essential components in how deep learning works.

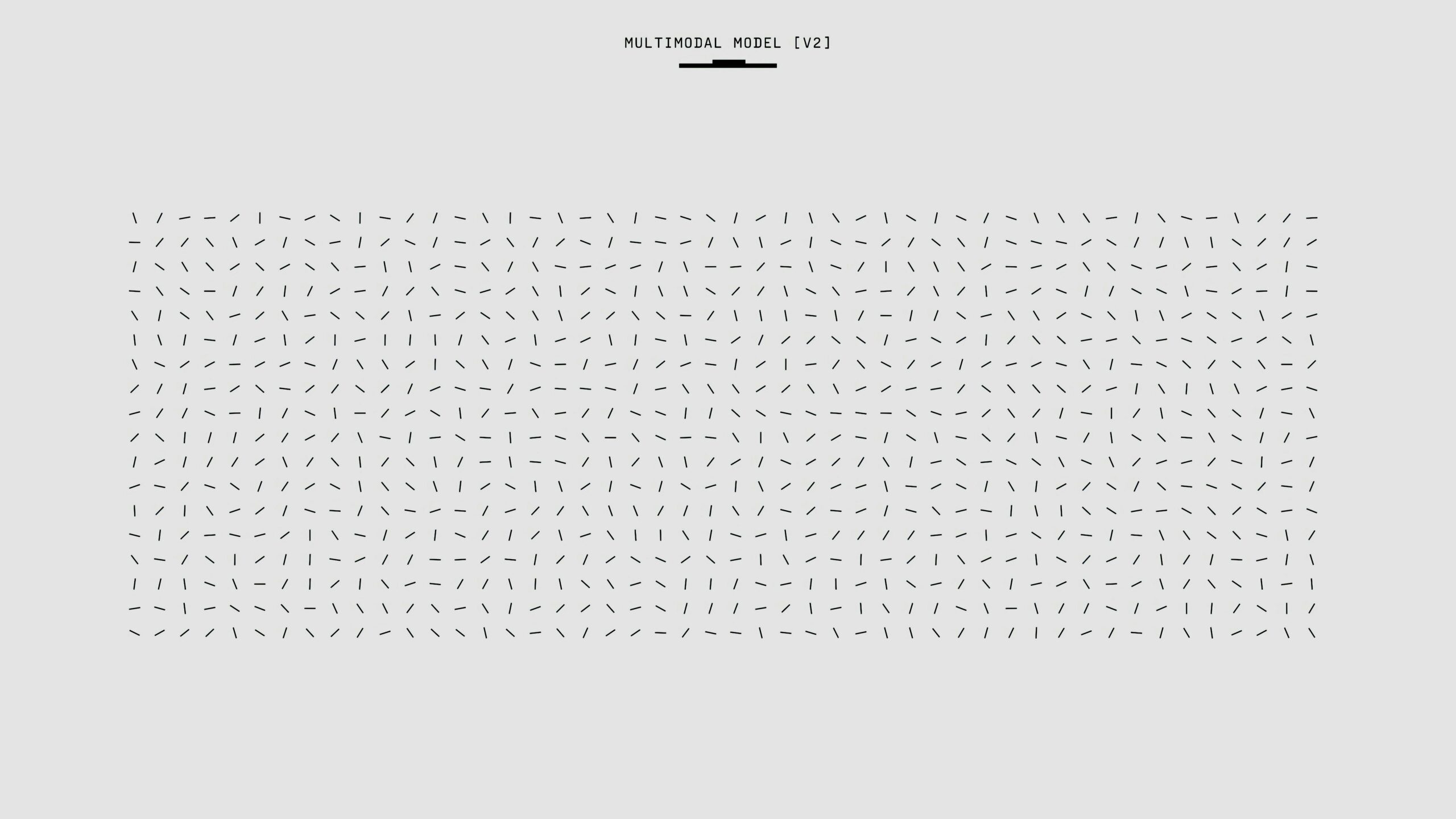

Activation functions are mathematical functions applied to the weighted input of each neuron to determine its output. They introduce non-linear properties to the network, enabling it to learn complex patterns in data; without them, a stack of layers would collapse into a single linear transformation. Common activation functions include sigmoid, tanh, ReLU (Rectified Linear Unit), and softmax. Each has its own characteristics: ReLU is a common default for hidden layers, sigmoid suits binary outputs, and softmax turns a vector of scores into class probabilities.
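For reference, the four activation functions named above can be written directly from their standard definitions. The sketch below uses only Python's `math` module; the branch in `sigmoid` and the max-subtraction in `softmax` are common numerical-stability tricks added here as an implementation choice, not something the definitions require.

```python
import math

def sigmoid(z):
    """Squashes any real number into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # avoids overflow for very negative z
    return e / (1.0 + e)

def tanh(z):
    """Squashes any real number into (-1, 1)."""
    return math.tanh(z)

def relu(z):
    """Passes positive values through and clips negatives to zero."""
    return max(0.0, z)

def softmax(zs):
    """Turns a list of scores into probabilities that sum to 1."""
    m = max(zs)  # subtracting the max leaves the result unchanged
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

print(sigmoid(0.0), tanh(0.0), relu(-3.0), relu(2.5))
print(softmax([1.0, 2.0, 3.0]))
```

Sigmoid, tanh, and ReLU act on one value at a time, while softmax acts on a whole vector, which is why it is typically used only at the output layer of a classifier.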

Backpropagation, short for "backward propagation of errors," is an algorithm used to train neural networks. It works by calculating the gradient of the loss function with respect to the weights of the network. This gradient is then used to update the weights in the network, reducing the error in predictions made by the model. Backpropagation is an iterative process that fine-tunes the weights of the neural network to minimize the error between predicted and actual outputs.
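For a single sigmoid neuron, the gradient that backpropagation computes can be derived by hand with the chain rule and checked numerically. The sketch below assumes a squared-error loss L = 0.5 * (a - y)^2 with a = sigmoid(w * x + b); the function names and the example values are illustrative.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    """Squared error of a single sigmoid neuron on one example."""
    return 0.5 * (sigmoid(w * x + b) - y) ** 2

def gradients(w, b, x, y):
    """Chain rule: dL/dw = (a - y) * a * (1 - a) * x
                   dL/db = (a - y) * a * (1 - a)"""
    a = sigmoid(w * x + b)
    delta = (a - y) * a * (1.0 - a)  # error signal propagated back through sigmoid
    return delta * x, delta

def numerical_grad_w(w, b, x, y, eps=1e-6):
    """Central finite difference, used only to sanity-check the analytic gradient."""
    return (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2.0 * eps)

w, b, x, y = 0.3, -0.1, 2.0, 1.0
grad_w, grad_b = gradients(w, b, x, y)
print(f"analytic dL/dw:  {grad_w:.6f}")
print(f"numerical dL/dw: {numerical_grad_w(w, b, x, y):.6f}")

# One gradient descent update should lower the loss on this example.
lr = 0.1
new_loss = loss(w - lr * grad_w, b - lr * grad_b, x, y)
print(f"loss before: {loss(w, b, x, y):.6f}, after: {new_loss:.6f}")
```

In a multi-layer network the same chain rule is applied layer by layer from the output back to the input, reusing each layer's error signal to compute the one before it.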

Together, activation functions and backpropagation form the core of deep learning algorithms. By using activation functions to introduce non-linearity and backpropagation to optimize the network’s weights, deep learning models can learn from data and make accurate predictions across a wide range of tasks.

Applications of Deep Learning

Image recognition and computer vision

Deep learning has revolutionized the field of image recognition and computer vision, enabling machines to interpret and understand visual data with incredible accuracy. One of the most well-known applications of deep learning in this domain is image classification. By training deep neural networks on vast datasets of labeled images, these models can learn to distinguish between different objects, animals, and even facial expressions in photographs.

Moreover, deep learning has significantly enhanced object detection capabilities. Convolutional neural networks (CNNs) are commonly used for this purpose, allowing machines to identify and locate multiple objects within an image. This technology has numerous practical applications, from surveillance systems that can detect intruders to self-driving cars that can identify pedestrians and obstacles on the road.

Deep learning has also been instrumental in advancing facial recognition technology. By analyzing facial features and patterns, deep neural networks can accurately recognize individuals in photos or videos. This has led to widespread adoption in security systems, law enforcement, and even social media platforms for automatic tagging of friends in photos.

In addition to image recognition, deep learning has transformed the field of medical imaging. Neural networks trained on large amounts of medical data can assist researchers and healthcare professionals in detecting and diagnosing various conditions from X-rays, MRIs, and CT scans. This technology has the potential to improve diagnostic accuracy and patient outcomes.

Overall, the applications of deep learning in image recognition and computer vision are vast and diverse. From improving security systems and autonomous vehicles to enhancing medical diagnostics and social media platforms, deep learning continues to push the boundaries of what is possible with visual data analysis.

Natural language processing

Natural language processing (NLP) is a field of artificial intelligence that focuses on enabling machines to understand, interpret, and generate human language. Neural networks and other deep learning techniques have driven significant advances across its applications.

One of the key applications of deep learning in NLP is machine translation. Systems like Google Translate utilize deep learning models to accurately translate text from one language to another. These models can learn the nuances and context of different languages, resulting in more accurate translations.

Another important application is sentiment analysis, where deep learning models are used to determine the sentiment or emotion behind a piece of text. This is particularly useful for companies to analyze customer feedback, social media posts, and reviews to understand how their products or services are perceived by the public.

Additionally, chatbots and virtual assistants have greatly benefited from deep learning in NLP. These systems can now understand and respond to user queries in a more human-like manner, providing a more natural and engaging user experience.

Furthermore, deep learning has revolutionized text generation tasks, such as language modeling and text summarization. Models like GPT (Generative Pre-trained Transformer) have been able to generate coherent and contextually relevant text, leading to advancements in content creation and summarization tasks.

Overall, the applications of deep learning in natural language processing have transformed the way we interact with machines and have opened up new possibilities for communication, automation, and information retrieval.

Speech recognition

Speech recognition is one of the most significant applications of deep learning technology. It involves the process of converting spoken language into text, allowing computers to understand and interpret human speech. Deep learning models, particularly recurrent neural networks (RNNs) and transformers, have revolutionized the field of speech recognition by significantly improving the accuracy and efficiency of transcription systems.

One of the key challenges in speech recognition is handling variations in accents, intonations, and background noise. Deep learning models excel in capturing these nuances and can adapt to different speaking styles, making them highly versatile in real-world applications.

Speech recognition technology has been integrated into various products and services, such as virtual assistants (e.g., Siri, Alexa, Google Assistant), voice-activated smart devices, dictation software, and customer service automation. These applications have transformed the way we interact with technology, enabling hands-free operation and enhancing accessibility for users with disabilities.

Furthermore, deep learning has enabled advancements in the development of speech-to-text systems for different languages, dialects, and domains. This has been particularly beneficial in areas such as transcription services, language translation, and subtitling for videos, making content more accessible and inclusive to a global audience.

Overall, the applications of deep learning in speech recognition have not only streamlined everyday tasks but have also opened up new possibilities for communication, accessibility, and user experience across various industries and sectors.

Challenges and Limitations of Deep Learning

Overfitting and underfitting

Overfitting and underfitting are two common challenges in deep learning that can significantly impact the performance and generalization of a model.

Overfitting occurs when a model learns the training data too well, to the point that it performs poorly on new, unseen data. This can happen when the model is too complex relative to the amount of training data available, leading it to memorize noise or outliers in the training set rather than capturing the underlying patterns. Overfitting can be mitigated by techniques such as regularization, dropout, and early stopping, which help prevent the model from becoming overly complex and over-reliant on the training data.
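Of the mitigation techniques listed, early stopping is the simplest to sketch: track the loss on held-out validation data and stop once it has not improved for a set number of epochs. The function name `early_stopping_epoch`, the `patience` parameter, and the loss values below are hypothetical and chosen only to illustrate the rule.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the best epoch seen before training would stop.

    Training stops once the validation loss has failed to improve
    for `patience` consecutive epochs."""
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, val_loss in enumerate(val_losses):
        if val_loss < best_loss:
            best_loss = val_loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # later epochs are never run
    return best_epoch

# Made-up validation losses: improvement stalls after epoch 3.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.40]
best = early_stopping_epoch(val_losses, patience=2)
print(f"keep the weights from epoch {best}")
```

Note the trade-off visible in the toy data: with a patience of 2, training halts before the later drop to 0.40 is ever observed, so patience is itself a hyperparameter to tune.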

On the other hand, underfitting happens when a model is too simple to capture the underlying patterns in the data, resulting in poor performance both on the training data and unseen data. Underfitting can occur when the model is not complex enough to learn the true relationships in the data, or when the training process is stopped too early. To address underfitting, one can consider using a more complex model, increasing the training duration, or improving the quality of the input features.

Finding the right balance between overfitting and underfitting is crucial for developing deep learning models that can generalize well to new data and make accurate predictions. Researchers and practitioners in the field continually work on developing new techniques and methodologies to mitigate these challenges and improve the overall performance and robustness of deep learning models.

Need for large amounts of labeled data

Deep learning, while a powerful tool for solving complex problems, often requires a large amount of labeled data to train models effectively. Labeled data refers to input data that has been tagged with the correct output. For example, in a deep learning model designed to classify images of cats and dogs, each image in the dataset would need to be labeled as either a cat or a dog.

The need for labeled data poses a significant challenge for many applications of deep learning. Acquiring and preparing large amounts of labeled data can be time-consuming, labor-intensive, and expensive. In some cases, it may be difficult or even impossible to obtain a sufficient quantity of high-quality labeled data, particularly for niche or specialized domains.

Furthermore, the quality of the labeled data is crucial for the performance of deep learning models. Errors or inconsistencies in the labels can lead to inaccuracies in the trained model, impacting its ability to generalize to new, unseen data. This issue is especially relevant in applications where labeling data is subjective or open to interpretation.

Addressing the need for large amounts of labeled data is an active area of research in the field of deep learning. Techniques such as transfer learning, semi-supervised learning, and active learning have been developed to make more efficient use of limited labeled data. Transfer learning, for example, involves leveraging pre-trained models on large datasets and fine-tuning them on smaller, domain-specific datasets to achieve good performance with less labeled data.

Despite these advancements, the requirement for labeled data remains a significant bottleneck for many deep learning applications. Overcoming this challenge will be essential for realizing the full potential of deep learning across a wide range of domains and industries.

Interpretability and transparency issues

Interpretability and transparency are significant challenges in the field of deep learning. As deep learning models become more complex and sophisticated, understanding how they make decisions becomes increasingly difficult. This lack of interpretability can be a hindrance in critical applications such as healthcare and finance, where it is essential to know why a model made a particular prediction or decision.

One of the reasons for the lack of interpretability in deep learning models is their black-box nature. These models often involve millions of parameters spread across many layers, making it challenging to trace how a specific input leads to a particular output. As a result, it becomes difficult to explain the rationale behind the decisions made by these models, leading to a lack of trust from end-users and regulatory bodies.

Transparency issues also arise when considering the data used to train deep learning models. Biases present in the training data can be inadvertently learned by the model, leading to biased predictions or decisions. Without proper mechanisms in place to identify and mitigate these biases, deep learning models can perpetuate and even exacerbate societal inequalities.

Addressing interpretability and transparency issues in deep learning is crucial for the widespread adoption of these models in sensitive domains. Researchers are exploring techniques such as model explainability algorithms and interpretable machine learning models to shed light on the decision-making process of deep learning models. Additionally, efforts to ensure the fairness and accountability of these models through rigorous testing and validation procedures are underway to mitigate potential risks associated with their opaqueness.

In the future, advancements in research and technology are expected to improve the interpretability and transparency of deep learning models, making them more trustworthy and reliable for a wide range of applications. Fostering transparency and interpretability in deep learning will not only enhance the societal acceptance of these models but also pave the way for their responsible deployment in critical domains.

Future Directions in Deep Learning

Advances in deep learning research

Deep learning research is advancing rapidly, pushing the boundaries of what is possible with artificial intelligence. One of the key areas of focus in current research is improving the efficiency and scalability of deep learning models. Researchers are exploring new architectures, algorithms, and techniques to make deep learning models faster, more accurate, and more adaptable to different tasks and domains.

Another important direction in deep learning research is the development of more interpretable and explainable models. As deep learning models become more complex and are applied to critical decision-making processes, there is a growing need to understand how these models arrive at their predictions. Researchers are working on techniques to increase the transparency of deep learning models, allowing users to have better insight into the decision-making process of these systems.

Additionally, there is a rising interest in exploring the intersection of deep learning with other fields such as reinforcement learning, meta-learning, and transfer learning. By combining deep learning with other machine learning approaches, researchers aim to create more robust and versatile AI systems that can generalize better to new tasks and environments.

Overall, the future of deep learning research holds exciting possibilities for advancing the capabilities of artificial intelligence and unlocking new applications across various industries. Continued innovation and collaboration in the field are expected to drive further breakthroughs in deep learning technology, paving the way for a future where AI plays an increasingly prominent role in our daily lives.

Potential areas for growth and innovation

One of the potential areas for growth and innovation in deep learning is the integration of different types of neural networks. While convolutional neural networks (CNNs) and recurrent neural networks (RNNs) have been widely used in various applications, there is a growing interest in developing hybrid models that combine the strengths of different network architectures.

For example, researchers are exploring the combination of CNNs and RNNs to improve the performance of tasks that require both spatial and temporal processing, such as video analysis and action recognition. By integrating these two types of networks, it is possible to create more powerful models that can capture complex patterns in data more effectively.

Moreover, there is increasing interest in developing neural networks that can learn more efficiently from limited labeled data. Few-shot learning and zero-shot learning techniques are being actively researched to enable neural networks to generalize to new tasks with very few labeled examples. This area of research has the potential to significantly reduce the data requirements for training deep learning models, making them more accessible and applicable to a wider range of problems.

Another direction for growth in deep learning is the development of more interpretable and explainable models. As deep learning models become increasingly complex, there is a growing need to understand how they make predictions and decisions. Researchers are exploring techniques to enhance the interpretability of neural networks, such as designing models that provide explanations for their outputs or incorporating mechanisms that allow users to inspect and understand the internal representations learned by the network.

In addition, the field of deep learning is expanding to new domains beyond traditional applications in computer vision, natural language processing, and speech recognition. Researchers are exploring the use of deep learning in areas such as healthcare, finance, and climate science, where large amounts of data can be leveraged to make predictions, optimize processes, and discover insights. The potential for deep learning to drive innovation in these domains is vast, with opportunities to develop specialized models and algorithms that can address specific challenges and create value in diverse industries.

Ethical Considerations in Deep Learning

Bias and fairness issues

Bias and fairness issues in deep learning have gained significant attention in recent years as AI technologies become more prevalent in various aspects of society. One of the primary concerns is the presence of biases in training data, which can lead to biased decision-making processes by AI systems. These biases can stem from historical data that reflect societal inequalities or from the data collection methods themselves.

Moreover, the lack of diversity in the teams developing AI algorithms can also contribute to biases in the technology. If the teams working on AI projects do not represent a wide range of backgrounds and perspectives, it can result in overlooking potential biases that may impact marginalized communities.

Furthermore, the black box nature of deep learning models poses challenges for ensuring transparency and accountability in decision-making processes. Understanding how these models arrive at specific conclusions is crucial for identifying and addressing any biases present in the system.

Addressing bias and fairness issues in deep learning requires a multi-faceted approach. It involves diversifying the teams working on AI projects, implementing unbiased data collection methods, and developing algorithms that prioritize fairness and transparency. Additionally, ongoing monitoring and auditing of AI systems can help identify and mitigate biases as they arise.
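As one possible form such an audit could take, the sketch below compares the rate of positive predictions across groups, a measure often called the demographic parity gap. It is an assumption-laden illustration: the function names, the group labels, and the predictions are made up, and a single metric like this is only one of several ways to examine fairness.

```python
def positive_rate(predictions):
    """Fraction of predictions that are positive (1)."""
    return sum(predictions) / len(predictions)

def demographic_parity_gap(predictions, groups):
    """Return the largest difference in positive-prediction rate between
    any two groups, along with the per-group rates."""
    by_group = {}
    for pred, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(pred)
    rates = {g: positive_rate(p) for g, p in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates

# Made-up binary model outputs and group membership for eight individuals.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap, rates = demographic_parity_gap(predictions, groups)
print(f"positive rates by group: {rates}")
print(f"demographic parity gap: {gap}")
```

A large gap does not by itself prove that a model is unfair, but it flags a disparity worth investigating in the data and the model.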

As deep learning continues to shape various industries and aspects of society, it is essential to prioritize ethical considerations to ensure that AI technologies are developed and deployed in a fair and unbiased manner. By addressing bias and fairness issues, we can harness the full potential of deep learning while mitigating potential harms and promoting a more inclusive and equitable future.

Privacy concerns

Privacy concerns are a significant ethical consideration in the field of deep learning. The use of deep learning technologies often involves the collection and analysis of vast amounts of data, including personal information. This raises concerns about the protection of individuals’ privacy and the potential misuse of sensitive data.

One of the primary privacy concerns in deep learning is data security. As deep learning systems rely on large datasets to train and improve their performance, there is a risk of data breaches and unauthorized access to personal information. Ensuring the security of data storage and transmission is crucial to protecting individuals’ privacy.

A closely related issue is the potential for algorithmic bias in deep learning systems that process personal data. Biases in the data used to train these systems can lead to discriminatory outcomes, especially in sensitive areas such as hiring, lending, and criminal justice. Addressing and mitigating bias in deep learning algorithms is essential to uphold principles of fairness and non-discrimination.

Furthermore, the lack of transparency in deep learning algorithms can also pose privacy concerns. Black-box algorithms that are difficult to interpret raise questions about how decisions are made and whether individuals have control over the use of their data. Implementing mechanisms for transparency and accountability in deep learning systems is essential for maintaining trust and respecting individuals’ privacy rights.

Overall, addressing privacy concerns in deep learning requires a multidisciplinary approach that considers technical, legal, and ethical considerations. By implementing privacy-enhancing technologies, promoting data protection regulations, and fostering transparency and accountability, the ethical implications of deep learning on privacy can be effectively managed.

Conclusion

Recap of key points about deep learning

In conclusion, deep learning is a subset of artificial intelligence that has gained significant attention and traction in recent years due to its ability to learn and make decisions on its own from large amounts of data. It is a powerful tool that has revolutionized various industries such as healthcare, finance, and transportation.

Throughout this chapter, we have explored the definition of deep learning, its history and development, the role of neural networks, the training process, popular applications such as image recognition and natural language processing, as well as the challenges and limitations it faces.

Despite its advancements and successes, deep learning is not without its challenges, including overfitting, the need for large amounts of labeled data, and concerns around interpretability and transparency. Ethical considerations such as bias, fairness, and privacy also need to be carefully addressed as deep learning continues to evolve and integrate into society.

Looking ahead, the future of deep learning holds exciting possibilities with ongoing research advancements and potential growth in diverse fields. It is crucial for stakeholders to navigate these developments responsibly, considering the ethical implications and societal impact of deep learning technology.

In summary, deep learning represents a transformative force that is reshaping how we approach problems and innovate solutions. Its importance and potential impact across various industries make it a critical area of study and investment for the future.

Importance and potential impact of deep learning in various industries

Deep learning has emerged as a powerful tool with the potential to revolutionize various industries. Its impact is already being felt in areas such as healthcare, finance, transportation, and many more. By harnessing the capabilities of deep learning, industries can optimize processes, improve decision-making, and unlock new possibilities that were previously unimaginable.

In healthcare, deep learning is being used for medical image analysis, drug discovery, personalized treatment plans, and disease diagnosis. This technology has the potential to enhance patient care, reduce medical errors, and ultimately save lives.

In finance, deep learning is employed for fraud detection, algorithmic trading, risk management, and customer service. By analyzing vast amounts of data in real time, financial institutions can make more informed decisions, improve customer experience, and mitigate risks effectively.

In transportation, deep learning is revolutionizing autonomous vehicles, traffic management, predictive maintenance, and route optimization. These advancements have the potential to increase safety on the roads, reduce traffic congestion, and provide more efficient transportation solutions.

The potential impact of deep learning transcends these industries and extends to fields such as agriculture, retail, manufacturing, and entertainment. By leveraging the power of deep learning algorithms, businesses can gain valuable insights, streamline operations, and deliver enhanced products and services to their customers.

As deep learning continues to evolve and mature, its importance in various industries is expected to keep growing. Companies that embrace this technology and incorporate it into their operations stand to gain a competitive advantage, drive innovation, and shape the future of their respective sectors. The potential impact of deep learning across industries is profound, promising a future where intelligent systems drive efficiency, productivity, and transformation across the board.